Metropsis research edition

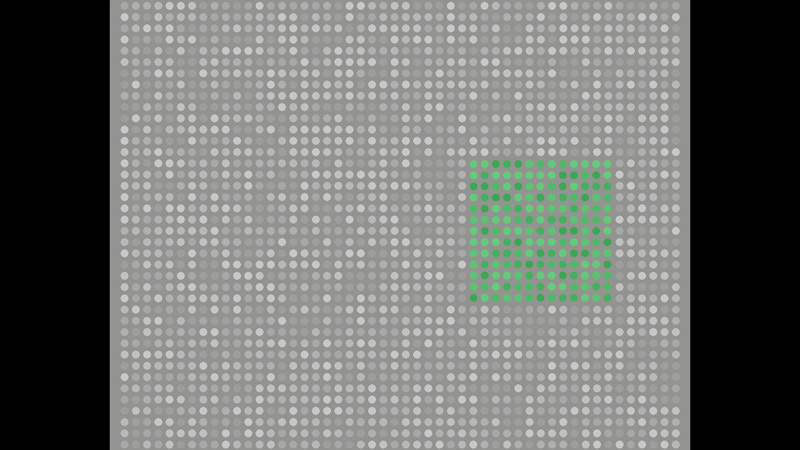

A complete toolbox for psychophysical assessment of visual function

Fast, accurate and more sensitive than standard tests

For normal, defective, paediatric, ageing and low vision populations

Metropsis is a complete toolbox

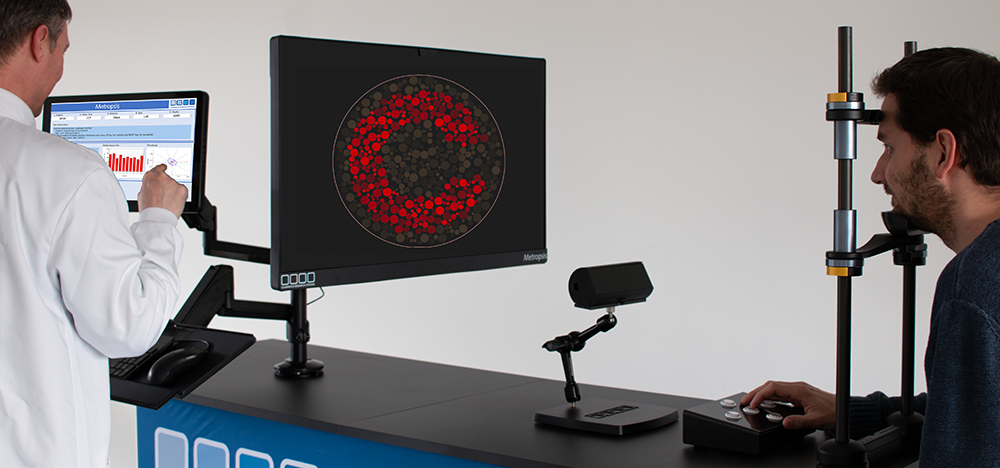

Metropsis is a complete test suite suitable for clinical and pre-clinical studies, drug trials, screening, sports science, applied vision and human factors research. Choose from a wide range of tests, including protocols designed for normal, paediatric, ageing and low vision populations – or we can develop a custom test for your project.

for faster, more precise assessment of visual function

Metropsis is more sensitive than standard charts, making it ideal for investigating diseases of the eye and brain, as well as the secondary effects of systemic disorders such as cardiovascular or neurological disease.

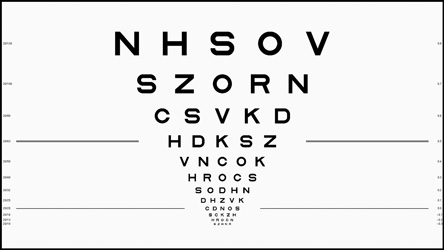

The research edition includes Metropsis Display++ technology (the screen displaying the ETDRS chart above) with a unique integrated sensor system, enabling the display to self-calibrate in real time. So you can be sure that your results are reliable and repeatable – even when performing long-term studies using multiple Metropsis systems across different sites.

trusted worldwide

Metropsis research edition is currently being used in clinical trials and natural history studies in research centres worldwide, including:

- Moorfields Eye Hospital, London

- Nuffield Laboratory of Ophthalmology, Oxford

- National Eye Institute, Bethesda

- Johnson & Johnson Vision Care

- Department of Clinical Neurosciences, University of Cambridge

- Kobe Eye Centre

- Tokyo Medical Centre

- L V Prasad Eye Institute, Hyderabad

- Kellogg Eye Centre, Ann Arbor

- Institut de la Vision, Paris

- Institute for Ophthalmic Research, Tübingen

- Emory Eye Centre, Atlanta

- Department of Ophthalmology, University of Colorado

- Department of Medicine and Optometry, Linnaeus University

- Faculty of Medicine, University of Coimbra

- University of Pennsylvania

- University of Nevada, Reno

- Azienda Ospedale Universita’ Padova, Oculistica Ospedale Sant’Antonio Padova, Centro di Ipovisione, Riabilitazione ortottica e Neuroftalmologia

- University of Latvia

- Karolinska Institutet, Stockholm

- Technological University Dublin

precision Display++ UHD technology

One of the key elements of Metropsis research edition is the calibrated 32” QLED LCD Display++ UHD Monitor, designed and manufactured exclusively by Cambridge Research Systems (see some earlier Display++ references below). Display++ UHD makes it easy to display calibrated visual stimuli with precision timing and provides excellent control of colour and contrast.

Reliable stimulus presentation

Off-the-shelf LCD panels suffer from large colour and luminance fluctuations. This may be acceptable for screening tests, but general purpose LCD displays are unsuitable for precision testing. Novel hardware corrections developed by our Staff Scientists ensure that Display++ UHD reliably presents calibrated test patterns on every trial.

Highly accurate colour reproduction and spatial uniformity

Display++ UHD reproduces colours with high accuracy (average CIE DE2000 < 0.3) and high spatial uniformity (over 95%) across the screen area. It offers 10-bit RGB native colour precision, up to 12-bit with Deep Colour Technology, allowing the investigator to obtain very fine contrast levels.
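To give a feel for what the extra bit depth means for contrast, the following is a minimal sketch rather than a description of how Display++ UHD works internally. It assumes an idealised, perfectly linearised display whose luminance is proportional to the digital grey level, and it uses a hypothetical helper, `min_weber_contrast`, to compute the smallest Weber contrast that a one-level increment produces on a mid-grey background.

```python
def min_weber_contrast(bits: int) -> float:
    """Smallest Weber contrast of a one-level increment on a mid-grey background.

    Assumption (not from the product documentation): luminance is exactly
    proportional to the digital level, with zero luminance at level 0.
    """
    levels = 2 ** bits        # number of addressable grey levels
    background = levels // 2  # mid-grey level used as the background
    # Weber contrast = (L_target - L_background) / L_background, in level units
    return 1 / background


for bits in (8, 10, 12):
    print(f"{bits}-bit: {min_weber_contrast(bits) * 100:.4f}% minimum contrast step")
```

Under these assumptions the smallest step is roughly 0.2% at 10 bits and roughly 0.05% at 12 bits, a four-fold gain in contrast resolution. Real displays deviate from this ideal, so the figures are indicative only.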

Self-calibrating monitor

Display++ UHD is calibrated at the factory and maintains its calibrated light output thanks to a built-in light sensor. The sensor is isolated from ambient light, so changes in room lighting will not affect the calibration of the monitor.

- High-quality 32” 4K UHD 3840×2160 IPS LCD panel with wide colour gamut Quantum Dot LED backlight

- 10-bit RGB native colour precision, up to 12-bit with Deep Colour technology

- Contrast ratio 1000:1

- Grey-to-grey response time 2ms

- 120Hz & 144Hz panel drive at 3840×2160 and 1920×1080

- Real time calibration ensures accurate luminance, regardless of the effects of warm up and ageing

- Hardware spatial uniformity correction, gamma correction, and CIE XYZ colour management system ensure accurate colour reproduction

- Configurable wide dynamic range backlight, suitable for testing in photopic, mesopic and scotopic conditions; no filters required

- Multiple synchronous TTL trigger outputs

- Integrates with CRS audio, eye tracking and behavioural response devices, and compatible with solutions from other vendors
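When planning acuity or contrast sensitivity tests, it is often useful to convert a panel specification into pixels per degree of visual angle. The sketch below is illustrative only: the function name `pixels_per_degree`, the 100 cm viewing distance and the 16:9 aspect ratio with square pixels are assumptions made for the example, not part of the Metropsis software.

```python
import math


def pixels_per_degree(h_pixels: int, diagonal_in: float, distance_cm: float,
                      aspect: tuple[int, int] = (16, 9)) -> float:
    """Approximate pixels per degree of visual angle at the screen centre."""
    aw, ah = aspect
    # Physical screen width derived from the diagonal and the aspect ratio.
    width_cm = diagonal_in * 2.54 * aw / math.hypot(aw, ah)
    pixel_cm = width_cm / h_pixels  # width of one pixel
    # Visual angle subtended by one pixel viewed straight on.
    deg_per_pixel = math.degrees(2 * math.atan(pixel_cm / (2 * distance_cm)))
    return 1 / deg_per_pixel


# Hypothetical setup: 1920-pixel-wide mode on a 32-inch panel viewed from 100 cm.
ppd = pixels_per_degree(1920, 32.0, 100.0)
print(f"{ppd:.1f} pixels per degree")
```

Doubling the horizontal pixel count at the same screen size and distance doubles the pixels per degree, which is why the higher-resolution mode suits fine-detail stimuli.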

References

Kohl, C., Spieser, L., Forster, B., Bestmann, S., & Yarrow, K. (2019). The neurodynamic decision variable in human multi-alternative perceptual choice. Journal of cognitive neuroscience, 31(2), 262-277.

Ghodrati, M., Zavitz, E., Rosa, M. G., & Price, N. S. (2019). Contrast and luminance adaptation alter neuronal coding and perception of stimulus orientation. Nature communications, 10(1), 941.

DiMattina, C., & Baker, C. L. (2019). Modeling second-order boundary perception: A machine learning approach. PLoS computational biology, 15(3), e1006829.

Flynn, O. J., & Jeffrey, B. G. (2019). Scotopic contour and shape discrimination using radial frequency patterns. Journal of vision, 19(2), 7-7.

Slattery, T. J., & Vasilev, M. R. (2019). An eye-movement exploration into return-sweep targeting during reading. Attention, Perception, & Psychophysics, 1-7.

Zhang, Q., & Li, S. (2019). The roles of spatial frequency in category‐level visual search of real‐world scenes. PsyCh journal.

Smith, P. L., & Corbett, E. A. (2019). Speeded multielement decision-making as diffusion in a hypersphere: Theory and application to double-target detection. Psychonomic bulletin & review, 26(1), 127-162.

Takao, S., Clifford, C. W., & Watanabe, K. (2019). Ebbinghaus illusion depends more on the retinal than perceived size of surrounding stimuli. Vision research, 154, 80-84.

Wright, D., & Chouinard, P. A. (2019). Effects of multitasking and intention–behaviour consistency when facing yellow traffic light uncertainty. Attention, Perception, & Psychophysics, 1-18.

Beatty, P. J., Buzzell, G. A., Roberts, D. M., & McDonald, C. G. (2018). Speeded response errors and the error-related negativity modulate early sensory processing. Neuroimage, 183, 112-120.

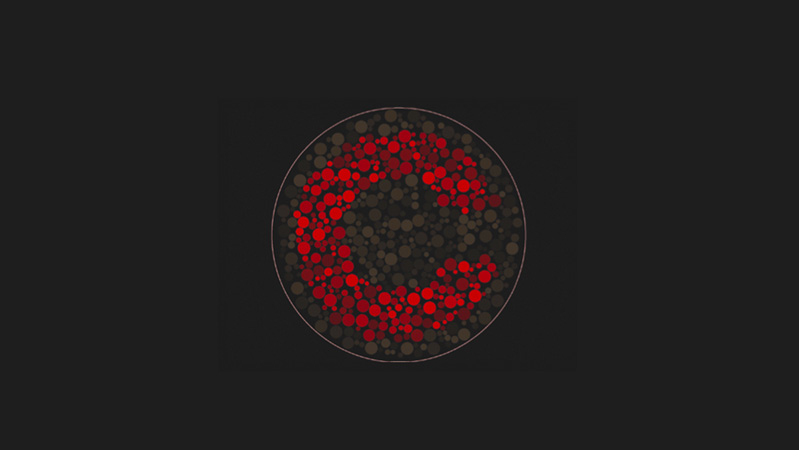

Kumaran, N., Ripamonti, C., Kalitzeos, A., Rubin, G. S., Bainbridge, J. W., & Michaelides, M. (2018). Severe Loss of Tritan Color Discrimination in RPE65 Associated Leber Congenital Amaurosis. Investigative ophthalmology & visual science, 59(1), 85-93.

Kawai, K., Chandler, D. M., & Ohashi, G. (2018, September). On the Role of Shaped-Noise Visibility for Post-Compression Image Enhancement. In International Conference on Global Research and Education (pp. 195-203). Springer, Cham.

Tamura, H., Higashi, H., & Nakauchi, S. (2018). Dynamic Visual Cues for Differentiating Mirror and Glass. Scientific reports, 8(1), 8403. doi: 10.1038/s41598-018-26720-x

Goettker, A., Braun, D. I., Schütz, A. C., & Gegenfurtner, K. R. (2018). Execution of saccadic eye movements affects speed perception. Proceedings of the National Academy of Sciences, 201704799 doi.org/10.1073/pnas.1704799115

Balsdon, T., & Clifford, C. W. G. (2018). Visual processing: conscious until proven otherwise. Royal Society open science, 5, 171783.

Park, A. S., Bedggood, P. A., Metha, A. B., & Anderson, A. J. (2017). Masking of random-walk motion by flicker, and its role in the allocation of motion in the on-line jitter illusion. Vision research, 137, 50-60. doi.org/10.1016/j.visres.2017.06.003

Palmer, C. J., & Clifford, C. W. (2017). Perceived Object Trajectory Is Influenced by Others’ Tracking Movements. Current Biology, 27(14), 2169-2176 doi.org/10.1016/j.cub.2017.06.019

Mannion, D. J., Donkin, C., & Whitford, T. J. (2017). No apparent influence of psychometrically-defined schizotypy on orientation-dependent contextual modulation of visual contrast detection. PeerJ, 5, e2921 doi.org/10.7717/peerj.2921

Braun, D. I., Schütz, A. C., & Gegenfurtner, K. R. (2017). Visual sensitivity for luminance and chromatic stimuli during the execution of smooth pursuit and saccadic eye movements. Vision research, 136, 57-69. doi.org/10.1016/j.visres.2017.05.008

Vercillo, T., Carrasco, C., & Jiang, F. (2017). Age-Related Changes in Sensorimotor Temporal Binding. Frontiers in Human Neuroscience, 11, 500 doi.org/10.3389/fnhum.2017.00500

Agaoglu, M. N., & Chung, S. T. (2017). Interaction between stimulus contrast and pre-saccadic crowding. Royal Society open science, 4(2), 160559. http://doi.org/10.1098/rsos.160559

Valsecchi, M. & Gegenfurtner, K.R. (2016). Dynamic re-calibration of perceived size in fovea and periphery through predictable size changes. Current Biology, 26, 59–63

Souto, D., Gegenfurtner, K.R. & Schütz, A.C. (2016). Saccade adaptation and visual uncertainty. Frontiers in Human Neuroscience, 10:227

Braun, D. I., & Gegenfurtner, K. R. (2016). Dynamics of oculomotor direction discrimination. Journal of Vision, 16(13):4, 1–26, doi.org/10.1167/16.13.4

DiMattina, C. (2016). Comparing models of contrast gain using psychophysical experiments. Journal of Vision, 16(9):1, 1–18, doi.org/10.1167/16.9.1

Zhao, L., Sendek, C., Davoodnia, V., Lashgari, R., Dul, M. W., Zaidi, Q., & Alonso, J.-M. (2015). Effect of Age and Glaucoma on the Detection of Darks and Lights. Investigative ophthalmology & visual science, 56(11), 7000-7006. doi.org/10.1167/iovs.15-16753